1. Quickstart

A Bayesian Belief Network (BBN) is defined as a pair (D, P), where

- D is a directed acyclic graph (DAG), and
- P is a joint distribution over a set of variables corresponding to the nodes in the DAG.

Creating a reasoning model involves defining D and P. The BBN is then converted into a secondary structure called a join tree [HD99], which is used to answer probabilistic, interventional and counterfactual queries [PGJ16].

1.1. Creating a model

1.1.1. Create the structure, DAG

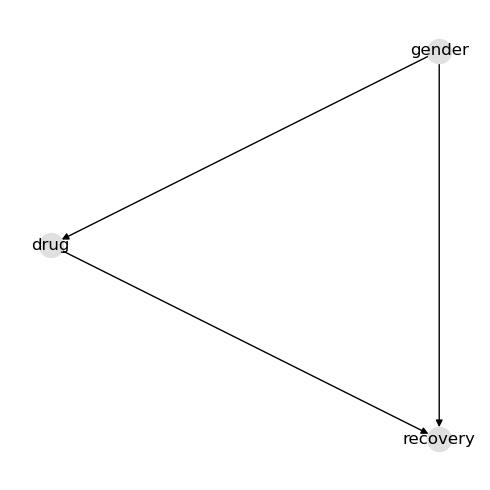

Define the structure using a dictionary with a "nodes" list and an "edges" list, where each edge is a [parent, child] pair. The nodes in this graph mean the following.

- gender is male or female
- drug is whether the person/patient took the medication
- recovery is whether the person recovered

In this example, taking the drug has a causal influence on recovery, and gender has a causal influence on both drug and recovery. These made-up relationships form the classic confounder example: gender is a common cause of the treatment (drug) and the outcome (recovery).

[1]:

d = {
    "nodes": ["drug", "gender", "recovery"],
    "edges": [["gender", "drug"], ["gender", "recovery"], ["drug", "recovery"]],
}

[2]:

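from pybbn.associational import dict_to_graph
import networkx as nx
import matplotlib.pyplot as plt

fig, ax = plt.subplots(figsize=(5, 5))
# Convert the structure dictionary into a networkx graph for plotting.
g = dict_to_graph(d)
# Top-down layout from Graphviz's dot program (requires pygraphviz).
pos = nx.nx_agraph.graphviz_layout(g, prog="dot")
nx.draw(g, pos=pos, ax=ax, with_labels=True, node_color="#e0e0e0")
fig.tight_layout()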
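Before building a model, it can help to confirm that the structure dictionary really describes a DAG. The sketch below is not part of pybbn. It assumes only the "nodes"/"edges" layout shown above and uses Python's standard graphlib module (Python 3.9+). The helper topological_order() is hypothetical: it either returns a parents-first ordering of the nodes or raises CycleError if the edges contain a cycle.

```python
from graphlib import CycleError, TopologicalSorter

d = {
    "nodes": ["drug", "gender", "recovery"],
    "edges": [["gender", "drug"], ["gender", "recovery"], ["drug", "recovery"]],
}


def topological_order(spec):
    """Hypothetical helper: parents-first node order, or CycleError if not a DAG."""
    # TopologicalSorter expects a mapping of node -> set of predecessors (parents).
    preds = {node: set() for node in spec["nodes"]}
    for parent, child in spec["edges"]:
        preds[child].add(parent)
    return list(TopologicalSorter(preds).static_order())


print(topological_order(d))  # ['gender', 'drug', 'recovery']
```

In this graph the order is unique, because drug depends on gender and recovery depends on both.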

1.1.2. Create the parameters, CPTs

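The variables in the running example are binary (each has 2 values). The parameters (or local probability models) are conditional probability tables (CPTs). The CPT for each node is defined through a dictionary (inspired by the pandas split and records orientations). Its columns entry lists the node's parents, then the node itself, then __p__. Each row of data gives one combination of values and its conditional probability.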

[3]:

p = {
    "gender": {
        "columns": ["gender", "__p__"],
        "data": [["male", 0.51], ["female", 0.49]],
    },
    "drug": {
        "columns": ["gender", "drug", "__p__"],
        "data": [
            ["female", "no", 0.23],
            ["female", "yes", 0.77],
            ["male", "no", 0.76],
            ["male", "yes", 0.24],
        ],
    },
    "recovery": {
        "columns": ["gender", "drug", "recovery", "__p__"],
        "data": [
            ["female", "no", "no", 0.31],
            ["female", "no", "yes", 0.69],
            ["female", "yes", "no", 0.27],
            ["female", "yes", "yes", 0.73],
            ["male", "no", "no", 0.13],
            ["male", "no", "yes", 0.87],
            ["male", "yes", "no", 0.07],
            ["male", "yes", "yes", 0.93],
        ],
    },
}
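Each group of rows that shares the same parent values is a conditional distribution, so its __p__ entries should sum to 1. The stand-alone sketch below checks this. It uses a hypothetical helper, check_cpts(), which is not a pybbn function. It assumes that every CPT lists the child in columns and puts __p__ last, as in the dictionaries above, and it reports any parent configuration that does not sum to 1.

```python
from collections import defaultdict
from math import isclose

# Same CPT dictionaries as above, written compactly.
p = {
    "gender": {"columns": ["gender", "__p__"],
               "data": [["male", 0.51], ["female", 0.49]]},
    "drug": {"columns": ["gender", "drug", "__p__"],
             "data": [["female", "no", 0.23], ["female", "yes", 0.77],
                      ["male", "no", 0.76], ["male", "yes", 0.24]]},
    "recovery": {"columns": ["gender", "drug", "recovery", "__p__"],
                 "data": [["female", "no", "no", 0.31], ["female", "no", "yes", 0.69],
                          ["female", "yes", "no", 0.27], ["female", "yes", "yes", 0.73],
                          ["male", "no", "no", 0.13], ["male", "no", "yes", 0.87],
                          ["male", "yes", "no", 0.07], ["male", "yes", "yes", 0.93]]},
}


def check_cpts(cpts, tol=1e-9):
    """Hypothetical helper: list (node, parent_values, total) for every parent
    configuration whose probabilities do not sum to 1 within tol."""
    problems = []
    for node, table in cpts.items():
        child = table["columns"].index(node)
        totals = defaultdict(float)
        for row in table["data"]:
            # Parent values are every value column except the child's own column.
            parents = tuple(v for i, v in enumerate(row[:-1]) if i != child)
            totals[parents] += row[-1]  # row[-1] is the __p__ entry
        problems += [(node, k, t) for k, t in totals.items()
                     if not isclose(t, 1.0, abs_tol=tol)]
    return problems


print(check_cpts(p))  # [] means every conditional distribution sums to 1
```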

1.1.3. Create the model

We use the create_reasoning_model() convenience method to create an inference model.

[4]:

from pybbn.factory import create_reasoning_model

model = create_reasoning_model(d, p)

1.2. Associational query

Associational queries are probabilistic queries. They can be executed with different types of evidence, or with a mixture of different types of evidence.

1.2.1. Query without evidence

We can query the model without any evidence as follows. The result maps each node name to a pandas DataFrame of that node's marginal probabilities.

[5]:

q = model.pquery()

[6]:

q["gender"]

[6]:

| | gender | __p__ |
|---|---|---|
| 0 | female | 0.49 |
| 1 | male | 0.51 |

[7]:

q["drug"]

[7]:

| | drug | __p__ |
|---|---|---|
| 0 | no | 0.5003 |
| 1 | yes | 0.4997 |

[8]:

q["recovery"]

[8]:

| | recovery | __p__ |
|---|---|---|
| 0 | no | 0.195764 |
| 1 | yes | 0.804236 |
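For a network this small, the marginals above can be cross-checked by brute force. Multiply the CPT entries along the chain rule, \(P(G, D, R) = P(G) P(D|G) P(R|G, D)\), for every full assignment, then sum out the other variables. This is a minimal sketch of exact inference by enumeration, not the join tree algorithm pybbn uses. The nested-dictionary CPT layout and the posterior() helper are assumptions made for illustration. Evidence is passed as a weight per value; with no evidence, every weight is effectively 1.

```python
from itertools import product

# CPTs from the quickstart, re-keyed as nested dicts for this sketch.
P_G = {"female": 0.49, "male": 0.51}
P_D = {"female": {"no": 0.23, "yes": 0.77}, "male": {"no": 0.76, "yes": 0.24}}
P_R = {
    ("female", "no"): {"no": 0.31, "yes": 0.69},
    ("female", "yes"): {"no": 0.27, "yes": 0.73},
    ("male", "no"): {"no": 0.13, "yes": 0.87},
    ("male", "yes"): {"no": 0.07, "yes": 0.93},
}


def posterior(node, evidence=None):
    """Hypothetical helper: P(node | evidence) by enumerating the full joint.

    evidence maps a variable name to {value: weight}; weights multiply each joint term.
    """
    evidence = evidence or {}
    scores = {}
    for g, d, r in product(P_G, ("no", "yes"), ("no", "yes")):
        assignment = {"gender": g, "drug": d, "recovery": r}
        # Chain rule: P(G) * P(D | G) * P(R | G, D).
        w = P_G[g] * P_D[g][d] * P_R[(g, d)][r]
        for var, weights in evidence.items():
            w *= weights[assignment[var]]
        scores[assignment[node]] = scores.get(assignment[node], 0.0) + w
    total = sum(scores.values())  # normalizing constant
    return {value: s / total for value, s in scores.items()}


print(round(posterior("drug")["no"], 4))      # 0.5003
print(round(posterior("recovery")["no"], 6))  # 0.195764
```

Enumeration grows exponentially with the number of variables, which is why a join tree is used in practice. For three binary nodes, however, it is a convenient sanity check.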

1.2.2. Query with observation evidence

Observation evidence is arguably the most common type of evidence. For observation evidence, exactly one value of the variable is set to 1 and all other values are set to 0. We can query the model with observation evidence as follows.

[9]:

evidences = {"gender": model.create_observation_evidences("gender", "male")}

q = model.pquery(evidences=evidences)

[10]:

q["gender"]

[10]:

| | gender | __p__ |
|---|---|---|
| 0 | female | 0.0 |
| 1 | male | 1.0 |

[11]:

q["drug"]

[11]:

| | drug | __p__ |
|---|---|---|
| 0 | no | 0.76 |
| 1 | yes | 0.24 |

[12]:

q["recovery"]

[12]:

| | recovery | __p__ |
|---|---|---|
| 0 | no | 0.1156 |
| 1 | yes | 0.8844 |
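Observation evidence can be read as a one-hot weight vector. Multiplying it into the joint keeps only the terms where gender is male and then renormalizes, which is ordinary conditioning. The brute-force sketch below shows this. Its nested-dict CPTs and hypothetical posterior() helper are illustrative assumptions, it uses enumeration rather than pybbn's join tree, and it reproduces the numbers above.

```python
from itertools import product

# CPTs from the quickstart, re-keyed as nested dicts for this sketch.
P_G = {"female": 0.49, "male": 0.51}
P_D = {"female": {"no": 0.23, "yes": 0.77}, "male": {"no": 0.76, "yes": 0.24}}
P_R = {
    ("female", "no"): {"no": 0.31, "yes": 0.69},
    ("female", "yes"): {"no": 0.27, "yes": 0.73},
    ("male", "no"): {"no": 0.13, "yes": 0.87},
    ("male", "yes"): {"no": 0.07, "yes": 0.93},
}


def posterior(node, evidence=None):
    """Hypothetical helper: P(node | evidence) by enumeration; evidence maps a
    variable to {value: weight}."""
    evidence = evidence or {}
    scores = {}
    for g, d, r in product(P_G, ("no", "yes"), ("no", "yes")):
        assignment = {"gender": g, "drug": d, "recovery": r}
        w = P_G[g] * P_D[g][d] * P_R[(g, d)][r]  # chain rule
        for var, weights in evidence.items():
            w *= weights[assignment[var]]
        scores[assignment[node]] = scores.get(assignment[node], 0.0) + w
    total = sum(scores.values())
    return {value: s / total for value, s in scores.items()}


# Observation evidence: a one-hot vector with 1 for the observed value.
obs = {"gender": {"female": 0.0, "male": 1.0}}
print(round(posterior("drug", obs)["no"], 4))      # 0.76
print(round(posterior("recovery", obs)["no"], 4))  # 0.1156
```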

1.2.3. Query with observation evidence, shortcut

The model.e() shortcut creates observation evidence from a dictionary that maps each variable to its observed value. This is less verbose than the approach above.

[13]:

q = model.pquery(evidences=model.e({"gender": "male"}))

[14]:

q["gender"]

[14]:

| | gender | __p__ |
|---|---|---|
| 0 | female | 0.0 |
| 1 | male | 1.0 |

[15]:

q["drug"]

[15]:

| | drug | __p__ |
|---|---|---|
| 0 | no | 0.76 |
| 1 | yes | 0.24 |

[16]:

q["recovery"]

[16]:

| | recovery | __p__ |
|---|---|---|
| 0 | no | 0.1156 |
| 1 | yes | 0.8844 |

1.2.4. Query with finding evidence

Finding evidence generalizes observation evidence. Each value must be either 0 or 1, but unlike observation evidence, more than one value may be set to 1. At least one value must be set to 1, however. If every value is 0, the evidence rules out every state and normalizing the posterior would divide by zero.

[17]:

evidences = {"gender": model.create_finding_evidences("gender", [1, 0], ["male", "female"])}

q = model.pquery(evidences=evidences)

[18]:

q["gender"]

[18]:

| | gender | __p__ |
|---|---|---|
| 0 | female | 0.0 |
| 1 | male | 1.0 |

[19]:

q["drug"]

[19]:

| | drug | __p__ |
|---|---|---|
| 0 | no | 0.76 |
| 1 | yes | 0.24 |

[20]:

q["recovery"]

[20]:

| | recovery | __p__ |
|---|---|---|
| 0 | no | 0.1156 |
| 1 | yes | 0.8844 |
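The example above uses [1, 0], which is equivalent to an observation. The brute-force sketch below illustrates the two edge cases of finding evidence instead. Setting every value to 1 carries no information and leaves the prior unchanged. Setting every value to 0 makes the normalizing constant zero, so Python raises ZeroDivisionError. The nested-dict CPTs and the posterior() helper are hypothetical, and the sketch uses enumeration rather than pybbn's own inference.

```python
from itertools import product

# CPTs from the quickstart, re-keyed as nested dicts for this sketch.
P_G = {"female": 0.49, "male": 0.51}
P_D = {"female": {"no": 0.23, "yes": 0.77}, "male": {"no": 0.76, "yes": 0.24}}
P_R = {
    ("female", "no"): {"no": 0.31, "yes": 0.69},
    ("female", "yes"): {"no": 0.27, "yes": 0.73},
    ("male", "no"): {"no": 0.13, "yes": 0.87},
    ("male", "yes"): {"no": 0.07, "yes": 0.93},
}


def posterior(node, evidence=None):
    """Hypothetical helper: P(node | evidence) by enumeration; evidence maps a
    variable to {value: weight}."""
    evidence = evidence or {}
    scores = {}
    for g, d, r in product(P_G, ("no", "yes"), ("no", "yes")):
        assignment = {"gender": g, "drug": d, "recovery": r}
        w = P_G[g] * P_D[g][d] * P_R[(g, d)][r]  # chain rule
        for var, weights in evidence.items():
            w *= weights[assignment[var]]
        scores[assignment[node]] = scores.get(assignment[node], 0.0) + w
    total = sum(scores.values())  # 0.0 if the evidence rules out every state
    return {value: s / total for value, s in scores.items()}


# Finding evidence with both values set to 1: same as having no evidence.
both = {"gender": {"female": 1.0, "male": 1.0}}
print(round(posterior("drug", both)["no"], 4))  # 0.5003

# Finding evidence with every value set to 0: the normalizer is zero.
try:
    posterior("drug", {"gender": {"female": 0.0, "male": 0.0}})
except ZeroDivisionError as exc:
    print("all-zero finding evidence:", exc)
```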

1.2.5. Query with virtual evidence

Virtual evidence is the most general form of evidence, generalizing both observation and finding evidence. Each value may be any number in the range \([0, 1]\).

[21]:

evidences = {"gender": model.create_virtual_evidences("gender", [0.01, 0.99], ["male", "female"])}

q = model.pquery(evidences=evidences)

[22]:

q["gender"]

[22]:

| | gender | __p__ |
|---|---|---|
| 0 | female | 0.989596 |
| 1 | male | 0.010404 |

[23]:

q["drug"]

[23]:

| | drug | __p__ |
|---|---|---|
| 0 | no | 0.235514 |
| 1 | yes | 0.764486 |

[24]:

q["recovery"]

[24]:

| | recovery | __p__ |
|---|---|---|
| 0 | no | 0.277498 |
| 1 | yes | 0.722502 |
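The numbers above are consistent with reading virtual evidence as a likelihood vector that is multiplied into the joint and then renormalized. For example, P(male) goes from 0.51 to \(0.51 \cdot 0.01 / (0.51 \cdot 0.01 + 0.49 \cdot 0.99) \approx 0.0104\). The brute-force sketch below applies that assumption. Its nested-dict CPTs and posterior() helper are hypothetical, and it is not pybbn's implementation.

```python
from itertools import product

# CPTs from the quickstart, re-keyed as nested dicts for this sketch.
P_G = {"female": 0.49, "male": 0.51}
P_D = {"female": {"no": 0.23, "yes": 0.77}, "male": {"no": 0.76, "yes": 0.24}}
P_R = {
    ("female", "no"): {"no": 0.31, "yes": 0.69},
    ("female", "yes"): {"no": 0.27, "yes": 0.73},
    ("male", "no"): {"no": 0.13, "yes": 0.87},
    ("male", "yes"): {"no": 0.07, "yes": 0.93},
}


def posterior(node, evidence=None):
    """Hypothetical helper: P(node | evidence) by enumeration; evidence maps a
    variable to {value: likelihood weight}."""
    evidence = evidence or {}
    scores = {}
    for g, d, r in product(P_G, ("no", "yes"), ("no", "yes")):
        assignment = {"gender": g, "drug": d, "recovery": r}
        w = P_G[g] * P_D[g][d] * P_R[(g, d)][r]  # chain rule
        for var, weights in evidence.items():
            w *= weights[assignment[var]]  # likelihood weighting
        scores[assignment[node]] = scores.get(assignment[node], 0.0) + w
    total = sum(scores.values())
    return {value: s / total for value, s in scores.items()}


# Virtual evidence: soft weights in [0, 1], here favoring female.
virtual = {"gender": {"male": 0.01, "female": 0.99}}
print(round(posterior("gender", virtual)["male"], 6))   # 0.010404
print(round(posterior("drug", virtual)["no"], 6))       # 0.235514
print(round(posterior("recovery", virtual)["no"], 6))   # 0.277498
```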

1.2.6. Query with mixed types of evidence

Here, we issue an associational query that mixes observation evidence on gender with virtual evidence on drug.

[25]:

evidences = {
    "gender": model.create_observation_evidences("gender", "male"),
    "drug": model.create_virtual_evidences("drug", [0.60, 0.40], ["yes", "no"]),
}

q = model.pquery(evidences=evidences)

[26]:

q["gender"]

[26]:

| | gender | __p__ |
|---|---|---|
| 0 | female | 0.0 |
| 1 | male | 1.0 |

[27]:

q["drug"]

[27]:

| | drug | __p__ |
|---|---|---|
| 0 | no | 0.678571 |
| 1 | yes | 0.321429 |

[28]:

q["recovery"]

[28]:

| | recovery | __p__ |
|---|---|---|
| 0 | no | 0.110714 |
| 1 | yes | 0.889286 |
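When several pieces of evidence are given, one plausible reading is that each evidence vector is multiplied into every joint term independently. For drug, the weights become \(0.76 \cdot 0.4 = 0.304\) for no and \(0.24 \cdot 0.6 = 0.144\) for yes, so P(drug = no) = 0.304 / 0.448. The brute-force sketch below applies that reading and reproduces the table values. Its nested-dict CPTs and posterior() helper are hypothetical, not pybbn internals.

```python
from itertools import product

# CPTs from the quickstart, re-keyed as nested dicts for this sketch.
P_G = {"female": 0.49, "male": 0.51}
P_D = {"female": {"no": 0.23, "yes": 0.77}, "male": {"no": 0.76, "yes": 0.24}}
P_R = {
    ("female", "no"): {"no": 0.31, "yes": 0.69},
    ("female", "yes"): {"no": 0.27, "yes": 0.73},
    ("male", "no"): {"no": 0.13, "yes": 0.87},
    ("male", "yes"): {"no": 0.07, "yes": 0.93},
}


def posterior(node, evidence=None):
    """Hypothetical helper: P(node | evidence) by enumeration; evidence maps a
    variable to {value: weight}, and all evidence vectors are multiplied in."""
    evidence = evidence or {}
    scores = {}
    for g, d, r in product(P_G, ("no", "yes"), ("no", "yes")):
        assignment = {"gender": g, "drug": d, "recovery": r}
        w = P_G[g] * P_D[g][d] * P_R[(g, d)][r]  # chain rule
        for var, weights in evidence.items():
            w *= weights[assignment[var]]
        scores[assignment[node]] = scores.get(assignment[node], 0.0) + w
    total = sum(scores.values())
    return {value: s / total for value, s in scores.items()}


mixed = {
    "gender": {"female": 0.0, "male": 1.0},  # observation evidence
    "drug": {"yes": 0.60, "no": 0.40},       # virtual evidence
}
print(round(posterior("drug", mixed)["no"], 6))      # 0.678571
print(round(posterior("recovery", mixed)["no"], 6))  # 0.110714
```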

1.3. Interventional query

To estimate causal effects, we can apply the do operator [PGJ16]. For brevity, denote the variables of the running example as follows.

\(G\) is gender

\(D\) is drug

\(R\) is recovery

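Gender is a common cause of drug and recovery and is not a descendant of drug, so adjusting for it satisfies the backdoor criterion. The (backdoor) adjustment formula is defined as follows.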

\(P(R=r|\mathrm{do}(D=d)) = \sum_{g} P(R=r|D=d, G=g) P(G=g)\)

We can estimate the causal effect of each treatment value separately.

\(P(R=\mathrm{yes}|\mathrm{do}(D=\mathrm{yes})) = \sum_{g} P(R=\mathrm{yes}|D=\mathrm{yes}, G=g) P(G=g)\)

\(P(R=\mathrm{yes}|\mathrm{do}(D=\mathrm{no})) = \sum_{g} P(R=\mathrm{yes}|D=\mathrm{no}, G=g) P(G=g)\)

The average causal effect (ACE) can then be computed as follows.

\(\mathrm{ACE} = P(R=\mathrm{yes}|\mathrm{do}(D=\mathrm{yes})) - P(R=\mathrm{yes}|\mathrm{do}(D=\mathrm{no}))\)

[29]:

p_yes = model.iquery(Y=["recovery"], y=["yes"], X=["drug"], x=["yes"])

p_yes

[29]:

recovery 0.832

dtype: float64

[30]:

p_no = model.iquery(Y=["recovery"], y=["yes"], X=["drug"], x=["no"])

p_no

[30]:

recovery 0.7818

dtype: float64

The result below means that taking the drug causally increases the probability of recovery by about 5 percentage points (0.832 versus 0.7818).

[31]:

p_yes["recovery"] - p_no["recovery"]

[31]:

0.05020000000000002
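The adjustment formula is simple enough to evaluate by hand from the CPTs. The sketch below does this in plain Python and is independent of pybbn. Its helper names are hypothetical. For contrast, it also computes the unadjusted difference P(R=yes|D=yes) - P(R=yes|D=no), which weights each gender by P(G|D) instead of P(G). In this made-up data the unadjusted difference is negative while the adjusted effect is positive. That sign reversal is the confounding (Simpson's paradox) pattern that the adjustment corrects.

```python
# Parameters from the quickstart CPTs.
P_G = {"female": 0.49, "male": 0.51}
P_D = {"female": {"no": 0.23, "yes": 0.77}, "male": {"no": 0.76, "yes": 0.24}}
# P(R = yes | D = d, G = g), keyed by (g, d).
P_R_YES = {("female", "no"): 0.69, ("female", "yes"): 0.73,
           ("male", "no"): 0.87, ("male", "yes"): 0.93}


def p_recovery_do(drug):
    """Backdoor adjustment: sum over g of P(R=yes | D=drug, G=g) * P(G=g)."""
    return sum(P_R_YES[(g, drug)] * pg for g, pg in P_G.items())


def p_recovery_given(drug):
    """Plain conditioning P(R=yes | D=drug), which weights g by P(G=g | D=drug)."""
    joint = {g: P_G[g] * P_D[g][drug] for g in P_G}  # P(G=g, D=drug)
    return sum(P_R_YES[(g, drug)] * w for g, w in joint.items()) / sum(joint.values())


ace = p_recovery_do("yes") - p_recovery_do("no")
naive = p_recovery_given("yes") - p_recovery_given("no")
print(round(p_recovery_do("yes"), 4), round(p_recovery_do("no"), 4))  # 0.832 0.7818
print(round(ace, 4))  # 0.0502
print(naive < 0)      # True: the unadjusted comparison has the opposite sign
```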

1.4. Counterfactual query

In this example, we compute the following counterfactual: given that a male patient did not take the drug and did not recover, what would the probability of recovery have been had the patient taken the drug?

The evidence is that the patient is male, did not take the drug and did not recover. The evidence is the factual: it is what actually happened.

\(G=\mathrm{male}\)

\(D=\mathrm{no}\)

\(R=\mathrm{no}\)

The hypothetical is that the patient had taken the drug. The hypothetical is the counterfactual.

\(D^*=\mathrm{yes}\)

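The quantity of interest is the distribution of recovery in the counterfactual world, given all of the factual evidence.

\(P(R_{D^*=\mathrm{yes}} | G=\mathrm{male}, D=\mathrm{no}, R=\mathrm{no})\)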

[32]:

Y = "recovery"

e = {"gender": "male", "drug": "no", "recovery": "no"}

h = {"drug": "yes"}

The probability of recovery in the counterfactual is about 83%.

[33]:

model.cquery(Y, e, h)

[33]:

| | recovery | __p__ |
|---|---|---|
| 0 | no | 0.173882 |
| 1 | yes | 0.826118 |

1.5. Graphical query

Below are some examples of graphical queries.

1.5.1. d-separation and conditional independence

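We can query whether two nodes are d-separated, optionally given a set of conditioning nodes [Pea18]. Here, drug and recovery are never d-separated, because no conditioning set can block the direct edge from drug to recovery.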

[34]:

model.is_d_separated("drug", "recovery")

[34]:

False

[35]:

model.is_d_separated("drug", "recovery", {"gender"})

[35]:

False
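A d-separation test can be sketched with the moralized ancestral graph criterion:

1. Restrict the DAG to the queried nodes and their ancestors.
2. Connect ("marry") parents that share a child, and drop edge directions.
3. Delete the conditioning set, then check whether the two nodes are still connected.

The d_separated() function below is a hypothetical stand-alone helper, not pybbn's implementation. Removing the edge from drug to recovery gives a case where conditioning on gender does separate the two nodes.

```python
from collections import deque


def d_separated(edges, x, y, z=()):
    """Hypothetical helper: True if x and y are d-separated given z in the DAG
    described by edges, a list of (parent, child) pairs."""
    z = set(z)
    parents = {}
    for a, b in edges:
        parents.setdefault(b, set()).add(a)
    # 1. Ancestral subgraph: x, y, z and all of their ancestors.
    keep, stack = set(), [x, y, *z]
    while stack:
        n = stack.pop()
        if n not in keep:
            keep.add(n)
            stack.extend(parents.get(n, ()))
    # 2. Moralize: undirected parent-child links plus links between co-parents.
    adj = {n: set() for n in keep}
    for child in keep:
        ps = sorted(parents.get(child, ()))
        for i, a in enumerate(ps):
            adj[a].add(child)
            adj[child].add(a)
            for b in ps[i + 1:]:
                adj[a].add(b)
                adj[b].add(a)
    # 3. Delete z and search for any remaining x-y connection.
    seen, queue = {x}, deque([x])
    while queue:
        n = queue.popleft()
        if n == y:
            return False
        for m in adj[n] - z - seen:
            seen.add(m)
            queue.append(m)
    return True


EDGES = [("gender", "drug"), ("gender", "recovery"), ("drug", "recovery")]
print(d_separated(EDGES, "drug", "recovery", {"gender"}))      # False

NO_DIRECT = [("gender", "drug"), ("gender", "recovery")]
print(d_separated(NO_DIRECT, "drug", "recovery", {"gender"}))  # True
```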

1.5.2. Confounders and backdoors

We can query for the minimal set of confounders between two nodes [PGJ16].

[36]:

model.get_minimal_confounders("drug", "recovery")

[36]:

['gender']
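A candidate adjustment set can be checked against Pearl's backdoor criterion. No member may be a descendant of the treatment, and the set must d-separate treatment and outcome once the treatment's outgoing edges are deleted. This sketch only verifies a given set; it does not search for a minimal one, as get_minimal_confounders() does. All helper functions are hypothetical and self-contained.

```python
from collections import deque


def d_separated(edges, x, y, z=()):
    """x and y are d-separated given z (moralized ancestral graph test)."""
    z = set(z)
    parents = {}
    for a, b in edges:
        parents.setdefault(b, set()).add(a)
    keep, stack = set(), [x, y, *z]  # x, y, z and their ancestors
    while stack:
        n = stack.pop()
        if n not in keep:
            keep.add(n)
            stack.extend(parents.get(n, ()))
    adj = {n: set() for n in keep}  # moral graph: parent links + married co-parents
    for child in keep:
        ps = sorted(parents.get(child, ()))
        for i, a in enumerate(ps):
            adj[a].add(child)
            adj[child].add(a)
            for b in ps[i + 1:]:
                adj[a].add(b)
                adj[b].add(a)
    seen, queue = {x}, deque([x])  # search for x-y connection avoiding z
    while queue:
        n = queue.popleft()
        if n == y:
            return False
        for m in adj[n] - z - seen:
            seen.add(m)
            queue.append(m)
    return True


def descendants(edges, x):
    """All nodes reachable from x along directed edges."""
    children = {}
    for a, b in edges:
        children.setdefault(a, set()).add(b)
    out, stack = set(), [x]
    while stack:
        for c in children.get(stack.pop(), ()):
            if c not in out:
                out.add(c)
                stack.append(c)
    return out


def satisfies_backdoor(edges, x, y, z):
    """Pearl's backdoor criterion for adjustment set z relative to (x, y)."""
    if set(z) & descendants(edges, x):
        return False
    # Deleting x's outgoing edges leaves only paths that enter x (backdoor paths).
    backdoor_graph = [(a, b) for a, b in edges if a != x]
    return d_separated(backdoor_graph, x, y, z)


EDGES = [("gender", "drug"), ("gender", "recovery"), ("drug", "recovery")]
print(satisfies_backdoor(EDGES, "drug", "recovery", {"gender"}))  # True
print(satisfies_backdoor(EDGES, "drug", "recovery", set()))       # False
```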

1.5.3. Mediators and frontdoors

We can query for the minimal set of mediators between two nodes [PGJ16]. In this running example, there are no mediators between drug and recovery, so there is no front-door path.

[37]:

model.get_minimal_mediators("drug", "recovery")

[37]:

[]
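The nodes that lie on some directed path from X to Y are exactly those that are both descendants of X and ancestors of Y. The hypothetical helper below lists them. This is a simplified notion of mediation, and pybbn's minimal mediator set may be defined differently. In the running example the only directed path is the direct edge from drug to recovery, so the list is empty. A toy chain with an extra node m shows a non-empty result.

```python
def reachable(start, nbrs):
    """All nodes reachable from start by repeatedly following nbrs."""
    out, stack = set(), [start]
    while stack:
        for m in nbrs.get(stack.pop(), ()):
            if m not in out:
                out.add(m)
                stack.append(m)
    return out


def mediators(edges, x, y):
    """Hypothetical helper: nodes on some directed path x -> ... -> y."""
    children, parents = {}, {}
    for a, b in edges:
        children.setdefault(a, set()).add(b)
        parents.setdefault(b, set()).add(a)
    # Descendants of x that are also ancestors of y, excluding x and y.
    return sorted((reachable(x, children) & reachable(y, parents)) - {x, y})


EDGES = [("gender", "drug"), ("gender", "recovery"), ("drug", "recovery")]
print(mediators(EDGES, "drug", "recovery"))                          # []
print(mediators([("a", "m"), ("m", "b"), ("a", "b")], "a", "b"))     # ['m']
```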

1.6. Data sampling

Sampling is done through logic sampling [Hen88]. If evidence is provided, rejection sampling is performed: samples that are inconsistent with the evidence are discarded.

[38]:

sample_df = model.sample(max_samples=1_000)

sample_df.shape

[38]:

(1000, 3)

[39]:

sample_df.head()

[39]:

| | gender | drug | recovery |
|---|---|---|---|
| 0 | female | yes | no |
| 1 | female | yes | yes |
| 2 | male | no | yes |
| 3 | female | yes | yes |
| 4 | male | yes | yes |
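Logic sampling draws each variable in topological order (gender, then drug, then recovery). Each value comes from the CPT row selected by the parents already sampled. With evidence, samples that disagree with it are thrown away. The sketch below uses Python's random module and is not pybbn's sampler. The helper names are hypothetical, and here n counts the samples drawn before rejection, which is an assumption about how a sample budget could be interpreted.

```python
import random

# CPTs from the quickstart, re-keyed as nested dicts for this sketch.
P_G = {"female": 0.49, "male": 0.51}
P_D = {"female": {"no": 0.23, "yes": 0.77}, "male": {"no": 0.76, "yes": 0.24}}
P_R = {
    ("female", "no"): {"no": 0.31, "yes": 0.69},
    ("female", "yes"): {"no": 0.27, "yes": 0.73},
    ("male", "no"): {"no": 0.13, "yes": 0.87},
    ("male", "yes"): {"no": 0.07, "yes": 0.93},
}


def draw(dist, rng):
    """Pick a key of dist with probability proportional to its value."""
    return rng.choices(list(dist), weights=list(dist.values()))[0]


def logic_sample(n, evidence=None, seed=0):
    """Hypothetical helper: draw n forward samples, keep those matching evidence."""
    rng = random.Random(seed)
    evidence = evidence or {}
    rows = []
    for _ in range(n):
        g = draw(P_G, rng)          # root node first
        d = draw(P_D[g], rng)       # then children, given sampled parents
        r = draw(P_R[(g, d)], rng)
        row = {"gender": g, "drug": d, "recovery": r}
        if all(row[k] == v for k, v in evidence.items()):  # rejection step
            rows.append(row)
    return rows


rows = logic_sample(1_000)
print(len(rows))  # 1000 (no evidence, so nothing is rejected)
males = logic_sample(1_000, evidence={"gender": "male"})
print(all(row["gender"] == "male" for row in males))  # True
```

Rejection wastes every sample that contradicts the evidence, so it becomes inefficient when the evidence is improbable.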

1.7. Serde

Saving and loading the model is easy.

1.7.1. Serialization

To persist the model, use model_to_dict() to create a Python dictionary and then serialize the dictionary as JSON data.

[40]:

import json
import tempfile

from pybbn.serde import model_to_dict

data1 = model_to_dict(model)

# Write the dictionary as JSON to a temporary file that is kept after closing.
with tempfile.NamedTemporaryFile(mode="w", delete=False) as fp:
    json.dump(data1, fp)
    file_path = fp.name

print(f"{file_path=}")

file_path='/var/folders/vt/g8zbc68n2nj8dkk85n8b19440000gn/T/tmp71xwe3g4'

1.7.2. Deserialization

To load the model, use the json module to deserialize the dictionary, and then use dict_to_model() to recreate the model.

[41]:

from pybbn.serde import dict_to_model

with open(file_path, "r") as fp:
    data2 = json.load(fp)

model2 = dict_to_model(data2)

[42]:

q = model2.pquery()

[43]:

q["gender"]

[43]:

| | gender | __p__ |
|---|---|---|
| 0 | female | 0.49 |
| 1 | male | 0.51 |

[44]:

q["drug"]

[44]:

| | drug | __p__ |
|---|---|---|
| 0 | no | 0.5003 |
| 1 | yes | 0.4997 |

[45]:

q["recovery"]

[45]:

| | recovery | __p__ |
|---|---|---|
| 0 | no | 0.195764 |
| 1 | yes | 0.804236 |